In June 2016, I bought a 3D printer on a whim, with only one really concrete idea for something useful to print. But no worries, because there turned out to be a website containing a few million free 3D models. Granted, the vast majority of models posted on it proved to be useless junk, but some were either very cool or truly useful. That site was Thingiverse. It was, and still is, the de facto standard for sharing 3D printable models. At that time, the site worked pretty well and had a very active and mostly friendly community. Questions would usually get useful advice in reply, and if there was a problem with the website, there would at least be an acknowledgement, even if the problem was not fixed soon.

The Good

Thingiverse used to be one of the main things that kept feeding my interest in 3D printing, not just because of the new models coming in every few minutes, but also because of the community, and because it was pretty easy to share my own models, many of which started out as improvements upon someone else's. I am now the co-author of the “Flexi Rex with stronger links,” one of the models a present-day buyer of a new 3D printer seems likely to print as one of their very first attempts. I never anticipated this; I only improved upon an existing model because it broke far too soon when my grandson was playing with it. This is what I like about this community: one can easily take an existing model, improve it, and share this improvement for everyone to enjoy.

The Bad

Today, however, things have changed for the worse. The turning point was sometime in 2017, when one of the main moderators of the website, called ‘glitchpudding’, suddenly left. After this, it became much harder to get any response from whoever was responsible for maintaining the site. On the increasingly rare occasions when there was some kind of announcement, it came from a different person each time, someone I had never heard of before, as if the previous one had been fired. This would not have been that bad if the website had maintained the same level of quality, but it did not. All kinds of annoying issues started popping up, like the website becoming very slow at times or throwing HTTP 500, 501, 502, … errors at random moments.

Complaining about this seemed to be of no use, because only rarely would there be a response from what might either be a Thingiverse employee or just some random joker. There was no way to verify that whoever was replying on the discussion forums was an actual Thingiverse / Makerbot employee: it was often a different username, more often without than with the “Thingiverse” badge.

Then it got worse: apparently the nearly invisible Oompa-Loompas now running the site were trying to make certain changes for… reasons. One can only guess when something has changed, because there is barely any communication when it happens. The most obvious sign of a change is an increase in the number of issues. Suddenly all photo previews were broken. Then suddenly they worked again, but any photo that did not have a 4:3 aspect ratio would be shown distorted. This was not the case before: photos used to be letterboxed and/or cropped in reasonable ways. What was the point of this change? It only made the user experience worse.

Now, at the end of 2019, there are finally some advance warnings or notifications that an issue is being worked on. For instance, in December there was a site-wide banner announcing that there would be “maintenance”. One would expect this maintenance to be aimed at improving the state of the website, but when it had ended, we instead had an extra HTTP error in the 500 range, as well as random 404 errors, even on the main page. A website makes a very bad impression if its main page throws a 404 error.

Overall, the website in its current state gives a strong impression of a lack of professionalism, sometimes downright amateurism. It seems that whoever is maintaining it puts no more effort into testing their changes than trying something once and then assuming it will always work. I wonder if they have a testing environment at all. Often it feels as if they make changes to the production servers directly. The changes suggest a lack of skill in developing a modern cloud-based website; rather, it looks like trendy hipster frameworks are just being thrown on top of a rickety, organically grown base without much vision. I am not saying the (obviously few) developers working on the site are amateurs, but the end result does give that impression. This is likely merely because management does not allow the developers enough time to implement things properly, so they are forced to hack things together quickly.

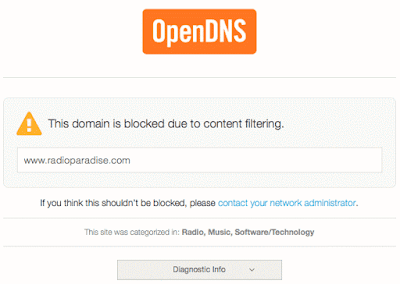

These are all ‘feelings’ and ‘impressions’, due to the total lack of communication. Only from the recent 504 error pages could I see, for instance, that they had either thrown ‘openresty’ into the mix, or had already been using it but had now broken something about it. There has been no announcement of this, nor any explanation why.

I have created my own issue tracker on GitHub just to make the most obvious problems with the website more visible, in the hope that this would help the maintainers decide what to fix next, but it seems to be completely ignored.

The Ugly: my guess at what is going on here

In case you didn't already know, Thingiverse is owned by Makerbot, and since 2013 Makerbot has been a subsidiary of Stratasys, a company that had been marketing 3D printers long before the big 3D printer boom started around 2010. Makerbot has changed from a small company selling affordable open source printers into a big company selling rather expensive walled-garden machines aimed at the education market. I guess most of the original enthusiastic team wanting to change the world (like glitchpudding) have either been fired and replaced by people only interested in milking profits from whatever looks vaguely promising without actually caring about it, or have turned into such people themselves.

Thingiverse was part of the original vision of making 3D printing affordable for the home user, a vision that does not really fit with Stratasys, a company selling industrial machines at industrial prices. I guess that every time since 2013 that a decision about Thingiverse had to be made, it has been biased towards gradually sunsetting the website. Nobody at those companies seems to understand the value of this huge playground that encourages anyone to buy, experiment with, and get familiar with 3D printers. It doesn't matter that no beginner will immediately buy a horribly expensive Makerbot or Stratasys printer: the mere fact that there is a low threshold for gaining experience with 3D printers increases the chance that these same tinkerers will later generate profits for those companies. That, however, is probably way too much thinking ahead for the average marketer who has been brainwashed to always make greedy short-term decisions and lodge themselves in a cozy local optimum.

What Really Is Going On

There is an article that contains a part about Makerbot's presence at the Formnext 2019 exhibition. Its author had the chance to talk to Jason Chan, who is responsible for Thingiverse. Many of my suspicions are confirmed: only two developers are assigned to the site (and I guess only part-time), and the company greatly underestimates the importance of Thingiverse. There seems to be some commitment to improving it, but again it looks as if those holding the purse strings do not share this commitment… There is still no concrete indication of what will actually happen to the site.

“But it's free!”

Every time someone posts a complaint about the broken state of the website on the Thingiverse Group forums, there will be replies along the lines of: “but it is free, you have no right to complain.” I disagree. There is no such thing as a free lunch. Everyone who uploads content to the website invests in it somehow, some more than others, depending on how much effort they put into crafting the presentation and documentation of their Things. I have invested quite a lot, with about 120 published Things, each with pictures and an extended description. What I am now getting in return is a pile of issues that make it harder to upload and edit Things, and I have no idea where the site is heading because of the lack of communication on the part of the maintainers. This lack of communication and lack of care to properly test each change feels very disrespectful, even if only indirectly. It almost makes me feel like an idiot for having put all this effort into my uploads during the years I have been on the site.

There are a few particular users on the Thingiverse groups who react with religious zeal against any complaint, one of whom has a pretty apt username given his writing style, which makes it seem as if he is drunk (he probably just is). Ignore them, because they are either trolls feeding on the anger, Makerbot employees paid to run a denial campaign, idiots, or all of the above. None of them upload much of anything, so they don't even have any ground to stand on with their claims that the site works perfectly fine.

The content creators are the only reason Thingiverse exists. These creators deserve a little more respect than being ignored and being handed increasingly cumbersome tools to upload their content, without any explanation of what is being changed about the website, when, and why. There is no excuse for such poor communication in an era with so many different digital communication methods (and no, Twitter is not really a good communication method). I know it can be done better, because I was pretty content with how the site was maintained and how changes were communicated when I joined it in 2016. I have the feeling that the main breaking point for the website was when the aforementioned glitchpudding left sometime in 2017. It seems to have gone downhill ever since. I am not someone who demands infinite progress in everything, but I do expect that when something is good, people make the effort to keep it good.

Solutions, alternatives?

Obviously, any sane person witnessing such developments on a website would start looking for an alternative. That, however, proves to be a big problem with Thingiverse: there is no real alternative. I have looked at some other sites, but Thingiverse's biggest trump card is the sheer size of its library of Things. No other site comes close, so it doesn't matter if Makerbot only keeps Thingiverse just usable enough: people will keep coming for the content.

YouMagine looks decent at first glance: its interface is the most similar to Thingiverse's that I have found so far. It is owned by Ultimaker, although this is not explicitly mentioned on the site's main page. After trying it out, however, it is obvious that YouMagine suffers from the same lack of maintenance, or worse. The ‘blog’ part has not been updated in ages, the featured things remain the same for a very long time, and reporting spam is impossible because the ‘report’ link only points to a dead support e-mail address. The 3D model previews and the ‘assembled view’ feature are mostly broken. Links and bold text do not work in the description text, which is incredibly annoying (I can imagine that they disabled links because of spam, but bold text: why?!)

The site still runs, but it looks like a ghost ship. Maybe the only good thing about this is that if nobody changes anything about it, they also cannot break anything about it…

There are other sites like MyMiniFactory, to which I have developed an aversion because of the apparent shills promoting it on Thingiverse groups as the best thing since sliced bread. There is also Cults3D, but just as with MMF, I find that there is too much emphasis on hiding content behind a paywall.

I have no concrete ideas for a solution. The best thing would be for a new website to be built by a community that does care about it, not tied to one particular manufacturer, and with a strategy to keep the community and website alive in the long term. Ideally, the Thingiverse library would then somehow be migrated to this site, but that is optional. I have made backups of all my uploaded models and their descriptions and photos, and I will happily re-upload them to a new website that is worth it. Maybe it would even be better this way because, to be honest, I estimate 80% of all Thingiverse models to be junk that would be better off garbage collected. Starting from a clean slate might be better…